App Engine generates usage reports to help you understand your application's performance and the resources your application is using. Based on these reports, you can employ the strategies listed below to manage your resources. After you make any changes, you will see their effect reflected in subsequent usage reports. For more information, please see our Billing FAQ and pricing page.

Viewing Your Usage Reports

Daily usage reports for App Engine can be found in the Admin Console on the Billing History page, located at:

https://appengine.google.com/billing/history?&app;_id=$APP_ID

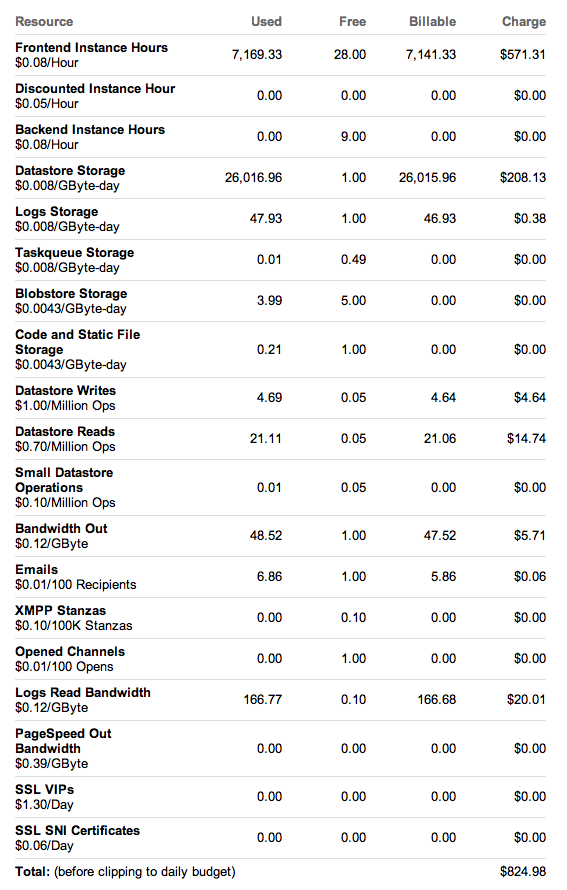

. If you click on the [+] sign beside one of the reports, this will expand one day's details so you can see resources. Here's what a usage report might look like for your application:

Now we'll go through the line items and explain what they mean, suggest some strategies you can use to manage resources, and explain what these strategies could mean for your application's performance.

Managing Instance Usage

The first two line items on the report deal with application instance usage. You can read about instances in our documentation. You can also see the number of instances that are being used to serve your application in the Admin Console at https://appengine.google.com/instances?&app_id=$APP_ID, or by selecting the "Instances" graph from the dropdown on your application's Dashboard at https://appengine.google.com/dashboard?&app_id=$APP_ID.

App Engine’s Scheduler

App Engine uses a scheduling algorithm to decide how many instances are necessary to serve the amount of traffic your application is receiving. For each request that your application receives, we decide whether to serve it with an available instance (either one that is idle or one that accepts concurrent requests), put the request in a pending request queue, or start a new instance for that request. We make this decision by looking at your available instances, how quickly your application has been serving requests recently (its latency), and how long it takes for a new instance of your application to initialize and start serving requests. In most cases, when we think we can serve a request more quickly with a new instance than with an existing instance, we start up a new instance to serve the incoming requests.

Of course, an application's traffic is never steady, so the scheduler also keeps track of the number of idle instances for your app. These idle instances can be useful for serving spikes in traffic without user-visible latency. If the scheduler determines that your application has too many idle instances, it will reclaim resources by tearing down some of the unused instances.
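To get a feel for how traffic and latency translate into instances, here is a back-of-the-envelope sketch based on Little's law (requests in flight = arrival rate × time per request). It is not the scheduler's actual algorithm, and the function name and parameters are made up for illustration; it ignores idle instances, startup time, and traffic spikes.

```python
import math


def estimate_busy_instances(requests_per_second, avg_latency_ms,
                            concurrent_requests_per_instance=1):
    """Rough count of instances kept busy by steady traffic.

    Illustrative estimate only; the real scheduler considers more factors.
    """
    # Average number of requests in flight = arrival rate * time per request,
    # spread over however many requests one instance can handle at once.
    per_instance_capacity_ms = 1000 * concurrent_requests_per_instance
    needed = requests_per_second * avg_latency_ms / per_instance_capacity_ms
    return max(1, math.ceil(needed))


if __name__ == "__main__":
    # 50 requests/second at 400 ms each, one request per instance at a time.
    print(estimate_busy_instances(50, 400))
    # The same traffic after cutting latency to 100 ms.
    print(estimate_busy_instances(50, 100))
```

Under these assumptions, cutting average latency by a factor of four cuts the number of busy instances by roughly the same factor, which is why the latency strategies below matter.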

Strategies for Decreasing the Number of Instances Your App Uses

Decrease Latency

Since the latency of your application has a huge impact on how many instances are required to handle your traffic, decreasing application latency can have a large effect on how many instances we use to serve your application. Here are a few things you can do to decrease application latency:

- Increase caching of frequently accessed shared data - In other words, use Memcache. Also, setting your application's cache-control headers can have a big impact on how efficiently your data is cached by servers and browsers. Even caching things for a few seconds can have a big impact on how efficiently your application serves traffic. Python applications should also make use of caching in the runtime.

- Use Memcache more efficiently - Use batch calls for get, set, delete, etc. instead of a series of individual calls. Where appropriate, consider using the Memcache Async API (Java, Python).

- Use Tasks for non-request bound functionality - If your application performs work that can be done outside of the scope of a user-facing request, put it in a task! Sending this work to the Task Queue instead of waiting for it to complete before returning a response can significantly reduce user-facing latency. The Task Queue can then give you much more control over execution rates and help smooth out your load.

- Use the datastore more efficiently - We go into more detail on this below.

- Parallelize multiple URL Fetch calls

  - Use the async URL Fetch API (Java, Python).

  - Use goroutines (Go).

  - Batch together multiple URL Fetch calls (which you might handle individually inside individual user-facing requests) and handle them in an offline task in parallel via async URL Fetch.

- For Java HTTP sessions, write asynchronously

  - HTTP sessions (Java) let you configure your application to asynchronously write HTTP session data to the datastore by adding <async-session-persistence enabled="true"/> to your appengine-web.xml. Session data is always written synchronously to memcache, and if a request tries to read the session data when memcache is not available, it will fail over to the datastore, which may not yet have the most recent update. This means there is a small risk your application will see stale session data, but for most applications the latency benefit far outweighs the risk.
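To illustrate why parallelizing fetches reduces latency, here is a minimal sketch that issues several calls at once instead of one after another. It uses threads from the Python standard library as a stand-in, not the App Engine async URL Fetch API, and `fake_fetch` is a hypothetical placeholder that only sleeps to mimic network delay.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_fetch(url):
    """Hypothetical stand-in for a URL Fetch call; sleeps to mimic latency."""
    time.sleep(0.05)
    return "response from " + url


def fetch_all_parallel(urls, fetch=fake_fetch):
    """Issue every fetch at once and return results in the order of `urls`."""
    if not urls:
        return []
    with ThreadPoolExecutor(max_workers=len(urls)) as pool:
        # pool.map preserves input order even if calls finish out of order.
        return list(pool.map(fetch, urls))


if __name__ == "__main__":
    start = time.time()
    results = fetch_all_parallel(["a.example", "b.example", "c.example"])
    # Elapsed time is close to one fetch, not the sum of all three.
    print(results, round(time.time() - start, 2))
```

With sequential calls the request waits for the sum of all fetch times; with parallel calls it waits for roughly the slowest one.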

Manually Adjust the Scheduler in the Admin Console

In the Admin Console's Application Settings page, we have two sliders available that let you set some of the variables that the scheduler uses to manage your application's instances. Here's a brief explanation of how you can use these to have more control over the trade-off between performance and resource usage for your application:

- Lower Max Idle Instances - The Max Idle Instances setting allows you to control the maximum number of idle instances available to your application. Setting this limit instructs App Engine to shut down any idle instances above that limit so that they do not consume additional quota or incur any charge. However, fewer idle instances also means that the App Engine Scheduler may have to spin up new instances if you experience a spike in traffic -- potentially increasing user-visible latency for your app.

- Raise Min Pending Latency - Raising Min Pending Latency instructs App Engine's scheduler to not start a new instance unless a request has been pending for more than the specified time. If all instances are busy, user-facing requests may have to wait in the pending queue until this threshold is reached. A high value will require fewer instances to be started, but may result in high user-visible latency during increased load.

Enable Concurrent Requests in Java

In our 1.4.3 release, we introduced the ability for your application's instances to serve multiple requests concurrently in Java. Enabling this setting will decrease the number of instances needed to serve traffic for your application, but your application must be threadsafe in order for this to work correctly. Read about how to enable concurrent requests in our Java documentation.

Enable Concurrent Requests in Python

In Python 2.7, we introduced the ability for your application's instances to serve multiple requests concurrently. Enabling this setting will decrease the number of instances needed to serve traffic for your application, but your application must be threadsafe in order for this to work correctly. Read about how to enable concurrent requests in our Python documentation. Concurrent requests are not available in the Python 2.5 runtime.

Note: All Go instances have concurrent requests enabled automatically.

Configuring Task Queue Settings

The default settings for the Task Queue are tuned for performance. With these defaults, when you put several tasks into a queue simultaneously, they will likely cause new Frontend Instances to spin up. Here are some suggestions for how to tune the Task Queue to conserve Instance Hours:

- Set the X-AppEngine-FailFast header on tasks that are not latency sensitive. This header instructs the Scheduler to immediately fail the request if an existing instance is not available. The Task Queue will retry and back-off until an existing instance becomes available to service the request. However, it is important to note that when requests with X-AppEngine-FailFast set occupy existing instances, requests without that header set may still cause new instances to be started.

- Configure your Task Queue's settings (Java, Python).

  - If you set the "rate" parameter to a lower value, Task Queue will execute your tasks at a slower rate.

  - If you set the "max_concurrent_requests" parameter to a lower value, fewer tasks will be executed simultaneously.

- Use backends (Java, Python) in order to completely control the number of instances used for task execution. You can use push queues with dynamic backends, or pull queues with resident backends.
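As a rough way to reason about the "rate" and "max_concurrent_requests" settings together, the sketch below computes an upper bound on how many tasks per second a queue can execute. This is a simplified model, not the Task Queue's actual behavior: it ignores bursts, retries, and back-off, and the function name and the `avg_task_seconds` parameter are assumptions made for illustration.

```python
def max_task_throughput(rate_per_second, max_concurrent_requests,
                        avg_task_seconds):
    """Upper bound on tasks/second a queue can execute under both limits.

    Simplified model: steady load, every task takes avg_task_seconds.
    """
    # With N tasks running at once and each taking avg_task_seconds,
    # at most N / avg_task_seconds tasks can finish per second.
    concurrency_bound = max_concurrent_requests / avg_task_seconds
    # The queue can never run faster than its configured rate either.
    return min(rate_per_second, concurrency_bound)


if __name__ == "__main__":
    # A rate of 20/s with only 2 concurrent half-second tasks is bounded
    # by concurrency, not by the rate setting.
    print(max_task_throughput(20, 2, 0.5))
```

Whichever limit is lower determines how much work reaches your instances at once, so lowering either setting can reduce the number of instances started for task execution.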

Serve Static Content Where Possible

Static content serving (Java, Python) is handled by specialized App Engine infrastructure, which does not consume Instance Hours.

If you need to set custom headers, use the Blobstore API (Java, Python, Go). The actual serving of the Blob response does not consume Instance Hours.

Managing Application Storage

App Engine calculates storage costs based on the size of entities in the datastore, the size of datastore indexes, the size of tasks in the task queue, and the amount of data stored in Blobstore. Here are some things you can do to make sure you don't store more data than necessary:

- Delete any entities or blobs your application no longer needs.

- Remove any unnecessary indexes, as discussed in the Managing Datastore Usage section below, to reduce index storage costs.

Managing Datastore Usage

App Engine accounts for the number of operations performed in the Datastore. Here are a few strategies that can result in reduced Datastore resource consumption, as well as lower latency for requests to the datastore:

- The dev console dataviewer displays the number of write ops that were required to create every entity in your local datastore. You can use this information to understand the cost of writing each entity. See Understanding Write Costs for information on how to interpret this data.

- Remove any unnecessary indexes, which will reduce storage and entity write costs. Use the "Get Indexes" functionality (Java, Python) to see what indexes are defined on your application. You can also see what indexes are currently serving for your application in the Admin Console at https://appengine.google.com/datastore/indexes?&app_id=$APP_ID.

- When designing your data model, you may be able to write your queries in such a way as to avoid custom indexes altogether. Read our Queries and Indexes documentation for more information on how App Engine generates indexes.

- Whenever possible, replace indexed properties (which are the default) with unindexed properties (Java, Python), which reduces the number of datastore write operations when you put an entity. Caution: if you later decide that you do need to query on the unindexed property, you will need not only to modify your code to use indexed properties again, but also to run a map reduce over all entities to re-put them.

- Due to the datastore query planner improvements in the App Engine 1.5.2 and 1.5.3 releases (Java, Python), your queries may now require fewer indexes than they did previously. While you may still choose to keep certain custom indexes for performance reasons, you may be able to delete others, reducing storage and entity write costs.

- Reconfigure your data model so that you can replace queries with fetch by key (Java, Python, Go), which is cheaper and more efficient.

- Use keys-only queries instead of entity queries when possible.

- To decrease latency, replace multiple entity get()s with a batch get().

- Use datastore cursors for pagination rather than offset.

- Parallelize multiple datastore RPCs via the async datastore API (Java, Python), or goroutines (Go).
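To see why unindexed properties and fewer indexes reduce write costs, the sketch below estimates the write operations for putting a new entity. It assumes the formula of 2 writes per entity, plus 2 per indexed property value, plus 1 per composite index value; treat that formula as an assumption and check it against Understanding Write Costs and the pricing page. The function name is hypothetical.

```python
def new_entity_write_ops(indexed_property_values, composite_index_values=0):
    """Estimated write ops for putting one new entity.

    Assumed formula (verify against current documentation):
    2 writes for the entity itself, 2 per indexed property value,
    and 1 per composite index value.
    """
    return 2 + 2 * indexed_property_values + composite_index_values


if __name__ == "__main__":
    # An entity with five indexed properties and no composite indexes.
    print(new_entity_write_ops(5))
    # The same entity after marking three of those properties unindexed.
    print(new_entity_write_ops(2))
```

Under this assumed formula, every property you mark as unindexed saves two write operations on each new entity put.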

Note: Small datastore operations include calls to allocate datastore ids or keys-only queries. See the pricing page for more information on costs.

Managing Bandwidth

For Outgoing Bandwidth, one way to reduce usage is to set the appropriate Cache-Control header on your responses whenever possible, and to set reasonable expiration times (Java, Python) for static files. Using public Cache-Control headers in this way will allow proxy servers and your clients' browsers to cache responses for the designated period of time.
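As a minimal sketch of what such headers look like, the helper below builds a public Cache-Control header and a matching Expires header using only the Python standard library. The function name is hypothetical, and how you attach the headers to a response depends on your web framework.

```python
import time
from email.utils import formatdate


def public_cache_headers(max_age_seconds, now=None):
    """Build response headers that let proxies and browsers cache a response.

    `now` is a Unix timestamp; it defaults to the current time and is
    exposed mainly so the result can be checked deterministically.
    """
    if now is None:
        now = time.time()
    return {
        # "public" allows shared caches (proxies) to store the response.
        "Cache-Control": "public, max-age=%d" % max_age_seconds,
        # Expires is an HTTP date in GMT, for older caches.
        "Expires": formatdate(now + max_age_seconds, usegmt=True),
    }


if __name__ == "__main__":
    # Allow caching for one hour.
    print(public_cache_headers(3600))
```

Choose a max-age that matches how often the content really changes; once a response is cached, clients may not ask for it again until it expires.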

Incoming Bandwidth is more difficult to control, since that's the amount of data your users are sending to your app. However, this is a good opportunity to mention our DoS Protection Service for Python and Java, which allows you to block traffic from IPs that you consider abusive.

Managing Other Resources

The last items on the report are the usages for the Email, XMPP, and Channel APIs. For these APIs, your best bet is to make sure you are using them effectively. One of the best strategies for auditing your usage of these APIs is to use Appstats (Python, Java) to make sure you're not making more calls than necessary. It's also a good idea to check your error rates and look out for any invalid calls you might be making. In some cases it might be possible to catch those calls early.